TLDR

- Discover the most effective AI safety tools helping organizations mitigate risks and ensure responsible AI deployment in 2026.

- Learn how AI governance platforms are becoming essential for compliance with emerging regulations worldwide.

- Understand the role of bias detection tools in creating fairer, more ethical AI systems.

- Explore real-time monitoring solutions that identify and prevent AI-related security threats.

- Find out how explainability tools are making AI decisions more transparent and trustworthy.

What if the AI systems powering your business decisions today could fail catastrophically tomorrow? With AI now embedded in everything from healthcare diagnostics to financial trading algorithms, the stakes have never been higher. According to Stanford University’s AI Index report, the number of AI incidents and controversies has increased 26-fold since 2012, making AI safety not just a technical concern but a business imperative.

As we move through 2026, the conversation around artificial intelligence has shifted dramatically. It’s no longer just about what AI can do; it’s about ensuring that what AI does is safe, ethical, and aligned with human values. Whether you’re a business leader implementing AI solutions or a developer building the next breakthrough application, understanding AI safety tools has become as critical as understanding the AI itself.

This comprehensive guide explores the essential AI safety tools that every organization should know about in 2026. From governance frameworks that ensure regulatory compliance to cutting-edge monitoring systems that detect threats in real-time, we’ll walk you through the technologies keeping AI development on the right track. You’ll discover practical insights into how these tools work, why they matter, and how to implement them effectively in your AI strategy.

Understanding AI Safety: Why It Matters More Than Ever

The rapid acceleration of AI capabilities has brought unprecedented opportunities alongside equally significant risks. AI systems now make decisions affecting millions of lives daily, from loan approvals to medical diagnoses, making the margin for error increasingly narrow. The World Economic Forum’s 2026 Global Risks Report identifies AI-related risks as among the top ten threats facing humanity over the next decade, emphasizing the urgent need for robust safety measures.

AI safety encompasses multiple dimensions that go beyond simple technical fixes. It includes ensuring systems behave as intended, preventing malicious use, maintaining privacy protections, eliminating harmful biases, and creating accountability mechanisms. The challenge lies in the fact that modern AI systems, particularly large language models and deep learning networks, often operate as “black boxes” where even their creators struggle to fully understand or predict their behavior.

Recent high-profile incidents have underscored these concerns. Autonomous vehicles have encountered safety-critical failures, facial recognition systems have demonstrated racial biases leading to wrongful arrests, and AI-powered trading algorithms have contributed to market instability. Each incident reinforces the importance of implementing comprehensive AI safety tools before deployment rather than reacting after problems emerge.

The regulatory landscape has evolved significantly to address these concerns. The European Union’s AI Act, which entered into force in August 2024 and phases in its obligations between 2025 and 2027, categorizes AI systems by risk level and mandates specific safety requirements for high-risk applications. Similarly, the United States has introduced sector-specific AI safety guidelines, while countries like China and Singapore have implemented their own frameworks. Organizations operating across borders must navigate this complex regulatory environment, making standardized AI safety tools more valuable than ever.

Key areas where AI safety tools provide critical protection include:

- Algorithmic bias detection – Identifying and correcting unfair treatment of protected groups

- Adversarial attack prevention – Defending against manipulated inputs designed to fool AI systems

- Privacy preservation – Ensuring AI systems don’t leak sensitive training data or user information

- Robustness testing – Verifying AI performance across diverse scenarios and edge cases

- Explainability enhancement – Making AI decisions interpretable and auditable

At Etagicon, we understand that navigating this complex landscape requires staying informed about the latest developments in AI safety. The tools we’ll explore in the following sections represent the current state-of-the-art in protecting organizations and users from AI-related risks while enabling responsible innovation.

Essential AI Governance and Compliance Platforms

AI governance platforms have emerged as the foundational layer for organizational AI safety strategies. These comprehensive tools provide centralized management, oversight, and compliance capabilities across an organization’s entire AI portfolio. As regulations tighten globally, these platforms have evolved from nice-to-have solutions to mission-critical infrastructure.

Leading AI governance platforms in 2026 include:

1. IBM watsonx.governance – This enterprise-grade platform offers end-to-end AI lifecycle management with automated compliance checking against multiple regulatory frameworks. It provides model risk management, bias detection, and comprehensive audit trails that satisfy regulatory requirements. Organizations using watsonx.governance report 40% faster compliance verification and significantly reduced audit preparation time.

2. Microsoft Azure Machine Learning Responsible AI Dashboard – Integrated directly into Azure’s AI services, this dashboard provides real-time monitoring of fairness, reliability, and transparency metrics. It automatically generates compliance reports formatted for different regulatory regimes and offers remediation recommendations when issues are detected. The platform’s strength lies in its seamless integration with existing Azure workflows.

3. Google Cloud Vertex AI Model Monitoring – Google’s solution excels at detecting model drift and performance degradation over time. It continuously compares production model behavior against baseline expectations and alerts teams when anomalies occur. The platform has proven particularly effective for organizations deploying multiple AI models simultaneously.

4. DataRobot AI Governance Platform – This platform specializes in model documentation and lineage tracking, creating comprehensive records of data sources, training processes, and deployment decisions. According to Gartner’s 2026 AI Governance Report, DataRobot leads in documentation capabilities, which proves invaluable during regulatory audits.

These governance platforms typically include several core capabilities:

- Model inventory and cataloging – Maintaining a complete registry of all AI models in development and production

- Risk assessment frameworks – Automated evaluation of models against organizational risk criteria

- Policy enforcement – Ensuring all models comply with defined standards before deployment

- Audit trail generation – Creating immutable records of model decisions and changes

- Compliance mapping – Aligning model characteristics with specific regulatory requirements
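To make the policy-enforcement capability concrete, here is a minimal sketch of a pre-deployment gate over a model inventory. It assumes a hypothetical `ModelRecord` schema and a hypothetical tiered `POLICY` table; the field names, risk tiers, and approval thresholds are illustrative and do not come from any of the platforms listed above.

```python
from dataclasses import dataclass, field

@dataclass
class ModelRecord:
    """Hypothetical inventory entry; field names are illustrative only."""
    name: str
    version: str
    risk_tier: str                  # e.g. "minimal", "limited", "high"
    has_model_card: bool
    fairness_review_passed: bool
    approvers: list = field(default_factory=list)

# Hypothetical organizational policy: stricter requirements for higher risk tiers.
POLICY = {
    "minimal": {"model_card": True, "fairness_review": False, "min_approvers": 1},
    "limited": {"model_card": True, "fairness_review": True, "min_approvers": 1},
    "high": {"model_card": True, "fairness_review": True, "min_approvers": 2},
}

def deployment_violations(record):
    """Return the policy violations for a model; an empty list means it may deploy."""
    rules = POLICY.get(record.risk_tier)
    if rules is None:
        return [f"unknown risk tier: {record.risk_tier!r}"]
    violations = []
    if rules["model_card"] and not record.has_model_card:
        violations.append("missing model card")
    if rules["fairness_review"] and not record.fairness_review_passed:
        violations.append("fairness review not passed")
    if len(set(record.approvers)) < rules["min_approvers"]:
        violations.append(f"needs {rules['min_approvers']} distinct approvers")
    return violations

credit_model = ModelRecord("credit-scoring", "2.1.0", "high",
                           has_model_card=True, fairness_review_passed=True,
                           approvers=["risk-officer"])
print(deployment_violations(credit_model))  # ['needs 2 distinct approvers']
```

In practice a check like this would typically run inside the deployment pipeline, with its result written to the audit trail.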

The return on investment for governance platforms extends beyond regulatory compliance. Organizations implementing these tools report improved collaboration between technical and business teams, faster model deployment cycles due to streamlined approvals, and reduced legal exposure. A Forrester study found that enterprises using comprehensive AI governance platforms experienced 35% fewer compliance incidents and saved an average of $2.3 million annually in potential regulatory fines and remediation costs.

When selecting a governance platform, organizations should evaluate integration capabilities with existing AI development tools, support for their specific regulatory requirements, scalability to accommodate growing AI portfolios, and the quality of documentation and reporting features. The right platform becomes a strategic enabler rather than a compliance checkbox, allowing organizations to innovate with confidence while maintaining appropriate safeguards.

Bias Detection and Fairness Testing Tools

Algorithmic bias remains one of the most pressing ethical concerns in AI deployment. Biased AI systems can perpetuate and amplify existing societal inequalities, leading to discriminatory outcomes in critical domains like hiring, lending, criminal justice, and healthcare. The sophistication of bias detection tools has advanced significantly, moving beyond simple demographic parity checks to nuanced fairness assessments.

IBM AI Fairness 360 is an open-source toolkit that has become an industry standard for fairness assessment. It provides over 70 fairness metrics and 10 bias mitigation algorithms, allowing organizations to evaluate and improve their models across multiple fairness definitions. The toolkit supports various fairness criteria including demographic parity, equalized odds, and individual fairness, recognizing that different contexts require different fairness approaches.
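Two of the criteria named above are simple to compute by hand, which helps when reading any toolkit’s output. The sketch below is plain Python, not the AI Fairness 360 API. It assumes binary labels and predictions and that every group contains both positive and negative examples. Demographic parity difference is the gap in selection rates between groups; for equalized odds it reports the larger of the true-positive-rate and false-positive-rate gaps, which is one common convention. The data is made up.

```python
def _mean(values):
    return sum(values) / len(values)

def group_rates(y_true, y_pred, groups):
    """Selection rate, true positive rate and false positive rate for each group."""
    rates = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        rates[g] = {
            "selection": _mean([y_pred[i] for i in idx]),
            "tpr": _mean([y_pred[i] for i in idx if y_true[i] == 1]),
            "fpr": _mean([y_pred[i] for i in idx if y_true[i] == 0]),
        }
    return rates

def _gap(rates, key):
    values = [r[key] for r in rates.values()]
    return max(values) - min(values)

def demographic_parity_difference(y_true, y_pred, groups):
    return _gap(group_rates(y_true, y_pred, groups), "selection")

def equalized_odds_difference(y_true, y_pred, groups):
    rates = group_rates(y_true, y_pred, groups)
    return max(_gap(rates, "tpr"), _gap(rates, "fpr"))

# Toy data: group B is selected more often and has a much higher false positive rate.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 1, 1, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(round(demographic_parity_difference(y_true, y_pred, groups), 3))  # 0.25
print(round(equalized_odds_difference(y_true, y_pred, groups), 3))      # 0.667
```

A model can look fair on one criterion and unfair on another, which is why toolkits report several metrics side by side.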

Aequitas from the University of Chicago’s Center for Data Science and Public Policy offers specialized capabilities for auditing machine learning models used in public policy and social impact applications. It provides intuitive visualizations that make fairness metrics accessible to non-technical stakeholders, facilitating important conversations between technical teams and policy makers. Organizations working in sensitive domains like criminal justice and social services find Aequitas particularly valuable.

Fairlearn is Microsoft’s open-source toolkit focused on assessing and improving fairness in machine learning models. Its strength lies in its integration with scikit-learn, Python’s most popular machine learning library, making it accessible to data scientists already working in that ecosystem. Fairlearn provides both assessment tools to identify fairness issues and mitigation algorithms to address them without sacrificing too much model performance.

These AI safety tools employ several approaches to detect and mitigate bias:

- Pre-processing techniques – Transforming training data to remove bias before model training begins

- In-processing methods – Incorporating fairness constraints directly into the model training process

- Post-processing approaches – Adjusting model outputs to achieve fairness criteria without retraining

- Intersectional analysis – Examining fairness across multiple protected attributes simultaneously

- Counterfactual fairness testing – Evaluating whether predictions would change if protected attributes were different
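The counterfactual idea can be approximated with a simple “flip test”: change only the protected attribute and check whether the decision changes. This is a simplification. Counterfactual fairness in the strict sense also propagates the change through features that causally depend on the attribute, which requires a causal model this sketch does not have. The `loan_model` below is a hypothetical, deliberately biased rule used only to show the test firing.

```python
def loan_model(applicant):
    """Hypothetical, deliberately biased scoring rule used only for illustration."""
    score = 0.5 * applicant["income"] / 1000 + 2 * applicant["years_employed"]
    if applicant["group"] == "B":
        score -= 10  # the unfair dependence the flip test should surface
    return score >= 40

def flip_test(model, applicants, attribute, values):
    """Return ids of applicants whose decision changes when only `attribute` changes."""
    flagged = []
    for applicant in applicants:
        original = model(applicant)
        for value in values:
            if value == applicant[attribute]:
                continue
            counterfactual = {**applicant, attribute: value}
            if model(counterfactual) != original:
                flagged.append(applicant["id"])
                break
    return flagged

applicants = [
    {"id": 1, "income": 60000, "years_employed": 5, "group": "A"},
    {"id": 2, "income": 80000, "years_employed": 10, "group": "B"},
    {"id": 3, "income": 40000, "years_employed": 2, "group": "A"},
]
print(flip_test(loan_model, applicants, "group", ["A", "B"]))  # [1]
```

A flip test only catches direct dependence on the attribute; a model that never reads it can still discriminate through proxies, which is why it is used alongside outcome-based and intersectional metrics.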

Real-world applications demonstrate the critical importance of these tools. A major financial institution using IBM AI Fairness 360 discovered that their loan approval model exhibited a 12% disparity in approval rates between equally qualified applicants from different demographic groups. After implementing bias mitigation techniques, they reduced this disparity to below 2% while maintaining overall model accuracy, demonstrating that fairness and performance aren’t mutually exclusive.

Healthcare organizations have found particular value in fairness testing for diagnostic AI systems. A research study published in Nature Medicine revealed that many medical AI systems exhibit performance disparities across racial groups due to biased training data. Organizations using comprehensive fairness testing tools identified these issues during development rather than after deployment, preventing potentially harmful outcomes for underserved populations.

The challenge with bias detection extends beyond technical implementation to organizational culture. According to research from the AI Now Institute, successful bias mitigation requires not just sophisticated tools but also diverse development teams, clear accountability structures, and ongoing monitoring of deployed systems. The most effective organizations combine advanced AI safety tools with human oversight and stakeholder engagement to ensure fairness throughout the AI lifecycle.

For organizations serious about ethical AI, investing in comprehensive bias detection capabilities isn’t optional; it’s essential for maintaining public trust, avoiding legal liability, and ensuring AI systems serve all users equitably. To explore more insights on responsible AI implementation, check out our collection of articles at Etagicon’s blog section.

AI Security and Adversarial Defense Systems

As AI systems become more prevalent in security-critical applications, they’ve also become attractive targets for malicious actors. Adversarial attacks, carefully crafted inputs designed to fool AI models, pose serious risks ranging from autonomous vehicle accidents to security system bypasses. The field of AI security has matured rapidly, producing sophisticated defense mechanisms that organizations can deploy to protect their AI investments.

Adversarial Robustness Toolbox (ART) from IBM Research leads the field in defending AI models against adversarial attacks. This comprehensive library provides both attack simulations and defense mechanisms across various AI frameworks including TensorFlow, PyTorch, and Keras. ART enables organizations to proactively test their models against known attack vectors and implement certified defenses that provide mathematical guarantees of robustness within certain bounds.

CleverHans is an open-source library developed by researchers at Google Brain that specializes in adversarial example generation and defense evaluation. It’s particularly valuable for organizations wanting to understand their model vulnerabilities before deployment. CleverHans includes implementations of state-of-the-art attacks like the Fast Gradient Sign Method (FGSM), Projected Gradient Descent (PGD), and Carlini-Wagner attacks, allowing security teams to conduct comprehensive penetration testing of their AI systems.
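To show what FGSM actually does, here is a hand-rolled version for a binary logistic-regression model, where the gradient of the cross-entropy loss with respect to the input has the closed form (p - y) * w. The weights, bias, input, and epsilon are made-up values. This is not the CleverHans or ART API; those libraries apply the same update, x_adv = x + eps * sign(gradient of the loss with respect to x), to models built in real frameworks.

```python
import math

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method: move each feature by eps in the direction that
    increases the loss. For logistic regression with cross-entropy loss,
    d(loss)/dx = (p - y) * w, so no automatic differentiation is needed."""
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]

# Hypothetical trained model and a correctly classified input with label 1.
w, b = [2.0, -1.0], -0.5
x, y = [0.6, 0.4], 1
x_adv = fgsm(w, b, x, y, eps=0.2)
print(round(predict_proba(w, b, x), 3))      # 0.574 -> classified as 1
print(round(predict_proba(w, b, x_adv), 3))  # 0.426 -> flipped to 0
```

No feature moved by more than 0.2, yet the prediction flipped; testing a model against perturbations of this kind is what the libraries above automate.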

Microsoft’s Counterfit takes a different approach, providing an automation framework for security testing of AI systems. It allows security teams to conduct adversarial assessments at scale, testing multiple models simultaneously and generating comprehensive security reports. Organizations with large AI portfolios find Counterfit invaluable for maintaining security posture across their entire model inventory.

Modern AI security tools address multiple attack vectors:

- Evasion attacks – Inputs modified to cause misclassification during inference

- Poisoning attacks – Corrupted training data designed to compromise model behavior

- Model inversion – Techniques that attempt to extract sensitive training data from deployed models

- Model stealing – Attacks that replicate model functionality without access to training data

- Backdoor attacks – Hidden triggers embedded during training that activate malicious behavior

According to an MIT Technology Review analysis, adversarial attacks have evolved significantly, with attackers developing techniques that work in physical environments, not just digital ones. Researchers demonstrated that placing specific stickers on stop signs could cause image classifiers of the kind used in autonomous vehicles to misclassify them, highlighting the real-world implications of AI security vulnerabilities.

Defense strategies have evolved in parallel with attack sophistication. Adversarial training, where models are trained on both normal and adversarial examples, has proven effective at improving robustness. Defensive distillation reduces model sensitivity to small input perturbations, although Carlini and Wagner later showed that stronger attacks can bypass it. Input transformation techniques detect and neutralize adversarial perturbations before they reach the model. Organizations typically implement multiple defense layers rather than relying on a single approach.

Financial services firms have been early adopters of AI security tools due to the high stakes involved. A major credit card company implemented comprehensive adversarial testing using ART before deploying their fraud detection AI. They discovered and addressed several vulnerabilities that could have allowed fraudsters to bypass their system, potentially saving millions in losses.

The healthcare sector faces particularly serious AI security concerns. Medical imaging AI systems must be protected against adversarial attacks that could cause misdiagnosis. Several hospitals now use adversarial defense systems to verify that their diagnostic AI isn’t vulnerable to manipulation, ensuring patient safety alongside diagnostic accuracy.

For organizations deploying AI in security-sensitive contexts, these AI safety tools represent essential infrastructure. The cost of implementing robust adversarial defenses is typically small compared with the potential consequences of successful attacks, whether measured in financial losses, safety incidents, or reputational damage.

Explainability and Interpretability Platforms

The “black box” nature of modern AI systems poses significant challenges for trust, accountability, and regulatory compliance. Explainability tools have emerged as critical AI safety tools that make model decisions transparent and interpretable to human stakeholders. As regulations increasingly require explanations for automated decisions, particularly in high-stakes domains, these tools have transitioned from research curiosities to business necessities.

SHAP (SHapley Additive exPlanations) has become the gold standard for model-agnostic explanations. Based on game theory, SHAP assigns each feature an importance value for a particular prediction, showing exactly how different inputs contributed to the model’s decision. Its mathematical rigor provides explanations that satisfy both technical teams and regulators. Organizations across industries use SHAP to understand everything from loan rejection reasons to medical diagnosis factors.
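SHAP’s core quantity can be computed exactly for a tiny model, which makes the game-theory idea concrete: a feature’s Shapley value is its marginal contribution averaged over every coalition of the other features, with the standard Shapley weights. The sketch assumes one particular convention for an “absent” feature, replacing it with a single baseline value. The SHAP library itself uses background data and fast approximations instead, because exact enumeration grows exponentially with the number of features. The model `f` is hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x; features outside a coalition take baseline values."""
    n = len(x)

    def value(coalition):
        return f([x[j] if j in coalition else baseline[j] for j in range(n)])

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        contribution = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                contribution += weight * (value(s | {i}) - value(s))
        phi.append(contribution)
    return phi

# Hypothetical model with one additive term and one interaction term.
def f(z):
    return 3 * z[0] + 2 * z[1] * z[2]

x, baseline = [1, 2, 3], [0, 0, 0]
phi = shapley_values(f, x, baseline)
print([round(p, 6) for p in phi])                # [3.0, 6.0, 6.0]
print(round(sum(phi), 6), f(x) - f(baseline))    # 15.0 15
```

The interaction term is split equally between the two features involved, and the attributions sum to the difference between the prediction and the baseline prediction, which is the additivity property that gives SHAP its name.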

LIME (Local Interpretable Model-agnostic Explanations) offers a complementary approach, explaining individual predictions by approximating the complex model locally with an interpretable one. LIME excels at generating intuitive explanations for non-technical stakeholders, making it particularly valuable in customer-facing applications where organizations need to justify AI decisions to end users.
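LIME’s local-approximation step can be sketched in a few lines: sample points near the instance, weight them by proximity, and fit a weighted linear model whose coefficients serve as the explanation. This is a simplified illustration, not the `lime` package. It perturbs raw features with Gaussian noise and uses an exponential proximity kernel similar in spirit to LIME’s; the noise scale, kernel width, and sample count are arbitrary assumptions, and the black-box function is hypothetical.

```python
import math
import random

def solve(a, b):
    """Solve the small linear system a @ beta = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    beta = [0.0] * n
    for r in range(n - 1, -1, -1):
        beta[r] = (m[r][n] - sum(m[r][c] * beta[c] for c in range(r + 1, n))) / m[r][r]
    return beta

def local_surrogate(f, x, n_samples=500, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model to f around x; return (intercept, coefs)."""
    rng = random.Random(seed)
    d = len(x)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]  # accumulates X^T W X
    xtwy = [0.0] * (d + 1)                          # accumulates X^T W y
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)
        row = [1.0] + z                             # leading 1.0 is the intercept column
        target = f(z)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * target
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    beta = solve(xtwx, xtwy)
    return beta[0], beta[1:]

# Hypothetical black-box model; its true local gradient at x = [1.0, 0.5] is [2.0, 3.0].
def black_box(z):
    return z[0] ** 2 + 3 * z[1]

intercept, coefs = local_surrogate(black_box, [1.0, 0.5])
print([round(c, 2) for c in coefs])  # close to the local gradient [2.0, 3.0]
```

The surrogate is only faithful near the chosen instance: the same black box explained at x = [3.0, 0.5] would give a first coefficient near 6, which is why LIME explanations are described as local.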

Fiddler AI provides an enterprise-grade explainability platform that combines multiple explanation techniques with monitoring and governance capabilities. It offers both global explanations (understanding overall model behavior) and local explanations (understanding individual predictions), presented through intuitive dashboards accessible to business users. According to Forrester’s analysis, Fiddler leads in providing explanations that satisfy regulatory requirements across multiple jurisdictions.

Arize AI specializes in production ML observability, providing real-time explanations for model predictions in deployed systems. Its strength lies in connecting model behavior to business outcomes, helping organizations understand not just how their models work but how they impact key performance indicators. Companies using Arize report significantly faster debugging and model improvement cycles.

Effective explainability tools provide multiple perspectives on model behavior:

- Feature importance rankings – Identifying which inputs most influence model decisions

- Counterfactual explanations – Showing what would need to change for a different outcome

- Concept activation vectors – Understanding what high-level concepts the model has learned

- Attention visualization – For attention-based neural networks such as transformers, showing which input regions or tokens received the most focus

- Decision rules extraction – Generating human-readable rules approximating model logic

The practical value of explainability extends beyond compliance. A healthcare provider using SHAP to explain their patient readmission prediction model discovered that it heavily weighted a seemingly irrelevant administrative code. Investigation revealed a data quality issue that would have gone undetected without explainability tools, potentially leading to poor clinical decisions.

Financial institutions have found explainability tools essential for meeting regulatory requirements while maintaining competitive model performance. The Equal Credit Opportunity Act requires lenders to provide specific reasons for credit denials. Banks using advanced explainability tools can now provide meaningful, legally compliant explanations while using sophisticated AI models that can outperform traditional scoring methods.

Research published in Harvard Business Review demonstrates that explainability improves human-AI collaboration. When users understand AI reasoning, they calibrate their trust better, relying on the AI when it’s likely correct and overriding it when necessary. Organizations implementing explainability tools report better outcomes than those using either fully autonomous AI or purely manual processes.

The regulatory landscape increasingly mandates explainability. The GDPR already entitles people subject to solely automated decisions to “meaningful information about the logic involved,” and the EU’s AI Act adds transparency obligations for high-risk AI systems along with a right to an explanation of decisions based on them. Similar requirements are emerging in the United States and Asia. Organizations that implement robust explainability capabilities now will be better positioned as regulations continue evolving.

At Etagicon, we believe explainability represents the bridge between AI capability and AI trust. The most sophisticated models deliver little value if stakeholders don’t trust or understand them. By investing in comprehensive explainability tools, organizations can unlock both the performance advantages of advanced AI and the trust necessary for widespread adoption.

Real-Time AI Monitoring and Anomaly Detection

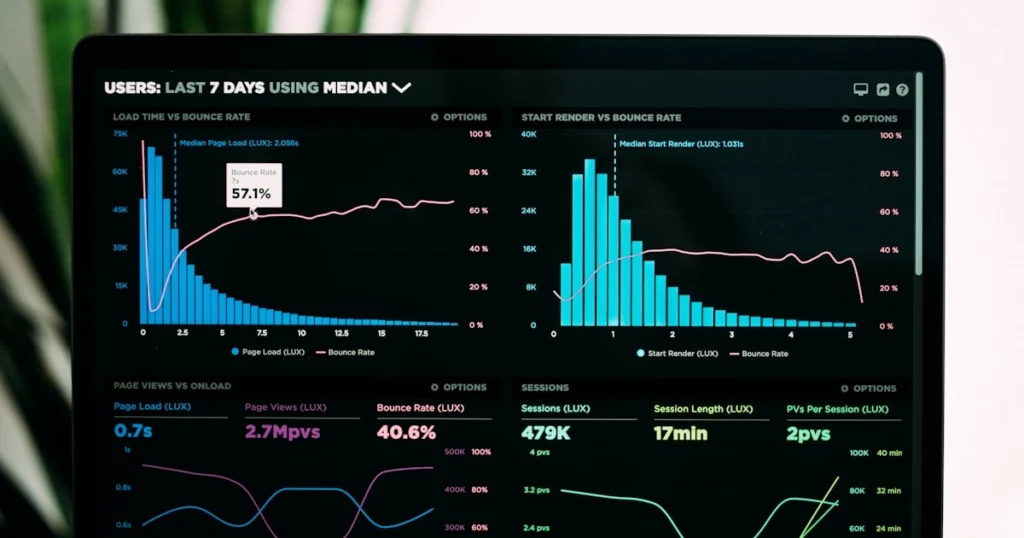

Deploying an AI model is just the beginning of its lifecycle. Production AI systems face numerous challenges including data drift, concept drift, performance degradation, and unexpected edge cases. Real-time monitoring tools have become essential AI safety tools that detect issues before they impact users or business operations.

Evidently AI provides comprehensive monitoring for machine learning models with particular strength in drift detection. It automatically identifies when the statistical properties of production data deviate from training data, triggering alerts before model performance degrades significantly. Organizations using Evidently report catching drift-related issues an average of three weeks earlier than traditional threshold-based monitoring.
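Drift detectors compare the distribution of a feature in production against a reference sample. One widely used statistic is the Population Stability Index (PSI); the sketch below computes it with equal-width bins over the reference range. It is plain Python rather than Evidently’s API, the data is simulated, and the 0.1 and 0.25 cut-offs in the comments are a common rule of thumb rather than a universal standard.

```python
import math
import random

def psi(reference, production, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a production sample.

    Bin edges are equal-width over the reference range; production values outside
    that range are clamped into the edge bins, and eps avoids log(0) for empty bins.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins

    def proportions(sample):
        counts = [0] * bins
        for v in sample:
            idx = int((v - lo) / width) if width > 0 else 0
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(sample), eps) for c in counts]

    p, q = proportions(reference), proportions(production)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))

rng = random.Random(42)
train = [rng.gauss(0, 1) for _ in range(5000)]
same = [rng.gauss(0, 1) for _ in range(5000)]      # no drift
shifted = [rng.gauss(1, 1) for _ in range(5000)]   # mean shifted by one standard deviation

# Rule of thumb: < 0.1 stable, 0.1 to 0.25 moderate shift, > 0.25 significant shift.
print(psi(train, same) < 0.1)      # True
print(psi(train, shifted) > 0.25)  # True
```

Running a check like this per feature on a schedule, and alerting when the index crosses a threshold, is the basic pattern that dedicated monitoring platforms build on.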

WhyLabs offers an observability platform specifically designed for AI/ML systems, providing statistical profiling and anomaly detection without requiring access to raw data. This privacy-preserving approach makes it particularly valuable for organizations handling sensitive information. WhyLabs’ lightweight agents monitor models continuously with minimal performance overhead, making it suitable even for latency-sensitive applications.

Aporia combines monitoring with automated root cause analysis, not only detecting when something goes wrong but helping teams understand why. When alerts trigger, Aporia provides detailed diagnostics including feature-level analysis, segment performance comparison, and suggested remediation approaches. This reduces the time from detection to resolution significantly.

Effective monitoring encompasses multiple dimensions:

- Data quality monitoring – Detecting null values, outliers, schema changes, and feature distribution shifts

- Model performance tracking – Monitoring accuracy, precision, recall, and domain-specific metrics in production

- Prediction drift detection – Identifying changes in model output distributions that may indicate problems

- Latency and throughput monitoring – Ensuring models meet performance requirements

- Fairness metric tracking – Continuously monitoring for emerging bias in production systems

Real-world examples illustrate the critical importance of monitoring. An e-commerce company using WhyLabs detected sudden drift in their product recommendation model. Investigation revealed that a supplier had changed product categorization, causing the model to recommend inappropriate items. Because monitoring caught this immediately, the company avoided significant revenue loss and customer dissatisfaction.

A healthcare provider monitoring their patient risk stratification model discovered subtle performance degradation over several months. Analysis revealed that demographic shifts in their patient population had gradually reduced model accuracy for newly represented groups. They retrained the model with recent data, restoring performance before clinical outcomes were impacted.

According to research from Stanford University, the majority of production ML systems experience significant performance degradation within the first year of deployment without active monitoring and maintenance. Organizations that implement comprehensive monitoring maintain stable model performance significantly longer and resolve issues faster when they do occur.

Advanced monitoring capabilities now include:

- Automated alerting with configurable thresholds and multi-channel notifications

- Segment analysis revealing performance differences across user groups or data subsets

- Correlation detection identifying relationships between drift and performance changes

- Historical tracking enabling trend analysis and proactive intervention

- Integration with incident response systems automatically triggering remediation workflows

The economics of monitoring favor proactive investment. A financial services firm calculated that their monitoring system, costing approximately $50,000 annually, prevented an estimated $2.3 million in losses by catching model performance issues before they significantly impacted operations. The return on investment exceeded 40x, not including avoided reputational damage.

Modern AI safety tools increasingly incorporate automated remediation capabilities alongside detection. When specific types of drift occur, these systems can automatically trigger model retraining, switch to backup models, or adjust decision thresholds while alerting human operators. This reduces mean time to resolution and minimizes business impact.

Organizations serious about production AI reliability should view monitoring as non-negotiable infrastructure, comparable to traditional application performance monitoring. The question isn’t whether to monitor but how comprehensively to do so and how best to integrate monitoring into existing operational workflows.

Privacy-Preserving AI Technologies

Privacy concerns represent a major barrier to AI adoption in sensitive domains like healthcare, finance, and personal services. Privacy-preserving AI technologies have matured significantly, enabling organizations to leverage AI’s power while protecting sensitive information. These AI safety tools help satisfy both regulatory requirements and user expectations around data protection.

Differential Privacy has emerged as the mathematical gold standard for privacy protection. Companies like Apple and Google use differential privacy in their AI systems to extract insights from user data while providing formal guarantees that individual information remains protected. Tools like OpenMined’s PySyft and Google’s TensorFlow Privacy enable developers to implement differential privacy in their models, adding carefully calibrated noise that preserves overall patterns while obscuring individual records.
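The “carefully calibrated noise” can be illustrated with the Laplace mechanism, the textbook way to answer a counting query with epsilon-differential privacy. A count has sensitivity 1 (adding or removing one person changes it by at most 1), so the noise scale is 1/epsilon. This sketch uses only the standard library and is not the PySyft or TensorFlow Privacy API; it also relies on Python’s non-cryptographic random generator and ordinary floating point, both of which production DP libraries handle more carefully. The ages and epsilon are made up.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two independent exponentials with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def dp_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1 / epsilon is sufficient.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

ages = [23, 67, 45, 71, 34, 80, 66, 52]   # hypothetical records; 4 people are over 65
rng = random.Random(7)
print(dp_count(ages, lambda age: age > 65, epsilon=0.5, rng=rng))  # 4 plus noise of scale 2
```

Smaller epsilon means larger noise and stronger protection. Answering the same query repeatedly spends additional privacy budget, because the guarantees of successive releases compose.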

Federated Learning represents a paradigm shift in how AI systems are trained, allowing models to learn from distributed data without that data ever leaving its origin. Rather than centralizing sensitive data, each participant trains on its own data locally and sends only model updates to a coordinating server, which aggregates them to improve the global model. Google pioneered this approach for keyboard prediction, training on millions of devices without collecting users’ typing data. Healthcare consortiums now use federated learning to develop diagnostic models across institutions without sharing patient records.
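The update-and-aggregate loop can be sketched with Federated Averaging (FedAvg), an algorithm introduced by Google researchers: each client runs a few steps of gradient descent on its own data, and the server averages the resulting weights in proportion to each client’s data size. The sketch assumes a one-feature linear model and two hypothetical clients. Real deployments add client sampling, secure aggregation, and often differential privacy, because model updates themselves can leak information.

```python
def local_update(weights, data, lr=0.1, epochs=20):
    """Client-side training: gradient descent on mean squared error for y = w*x + b."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = 2 / n * sum((w * x + b - y) * x for x, y in data)
        grad_b = 2 / n * sum((w * x + b - y) for x, y in data)
        w, b = w - lr * grad_w, b - lr * grad_b
    return (w, b), n

def federated_averaging(weights, client_datasets, rounds=50):
    """Server loop: broadcast weights, collect local updates, average by data size.

    Only the (w, b) pairs leave each client; the raw (x, y) data never does.
    """
    for _ in range(rounds):
        updates = [local_update(weights, data) for data in client_datasets]
        total = sum(n for _, n in updates)
        weights = tuple(sum(u[k] * n for u, n in updates) / total for k in range(2))
    return weights

# Two hypothetical clients whose private data both follow y = 2x + 1.
client_a = [(-1.0, -1.0), (0.0, 1.0), (1.0, 3.0)]
client_b = [(0.0, 1.0), (1.0, 3.0)]
w, b = federated_averaging((0.0, 0.0), [client_a, client_b])
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

The server recovers the shared relationship without ever seeing a single (x, y) pair, which is the property that lets hospitals or devices collaborate on one model.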

Homomorphic Encryption enables computation on encrypted data, allowing AI models to process sensitive information without ever decrypting it. While computationally expensive, homomorphic encryption has become practical for specific applications where privacy is paramount. Financial institutions use it for collaborative fraud detection, enabling them to benefit from shared intelligence without exposing their customers’ transaction data.
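To make “computation on encrypted data” concrete, the sketch below implements textbook Paillier encryption, a partially homomorphic scheme in which multiplying two ciphertexts yields an encryption of the sum of their plaintexts. It uses tiny hard-coded primes and is completely insecure; it only illustrates the homomorphic property. Production systems use keys of 2048 bits or more and vetted libraries, and fully homomorphic schemes such as those in Microsoft SEAL or OpenFHE support richer computation than addition. It assumes Python 3.8+ for `pow(x, -1, n)`.

```python
import math
import secrets

# Toy key generation with tiny primes: illustrative only, trivially breakable.
P, Q = 293, 433
N = P * Q
N2 = N * N
G = N + 1                                          # standard simple choice of generator
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(p - 1, q - 1)
MU = pow(LAM, -1, N)                               # valid shortcut because G = N + 1

def encrypt(m):
    """Encrypt an integer 0 <= m < N with fresh randomness r coprime to N."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N2) * pow(r, N, N2)) % N2

def decrypt(c):
    """Recover m = L(c^lambda mod N^2) * mu mod N, where L(u) = (u - 1) // N."""
    u = pow(c, LAM, N2)
    return ((u - 1) // N * MU) % N

def add_encrypted(c1, c2):
    """Homomorphic addition: the product of ciphertexts decrypts to m1 + m2 (mod N)."""
    return (c1 * c2) % N2

# Two parties encrypt their values; a third party adds them without decrypting.
c_total = add_encrypted(encrypt(1200), encrypt(345))
print(decrypt(c_total))  # 1545
```

The party computing `add_encrypted` never sees 1200, 345, or 1545; only the key holder can decrypt the result.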

Secure Multi-Party Computation (MPC) allows multiple parties to jointly compute functions over their private data while keeping that data secret. For AI applications, this enables collaborative model training where each participant contributes data but no single party can access others’ information. Pharmaceutical companies use MPC to collaborate on drug discovery research while protecting proprietary compound data.
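Additive secret sharing, one of the basic building blocks of MPC, shows how parties can compute a joint total without revealing their inputs. Each party splits its value into random shares that sum to it modulo a large prime and hands one share to each participant, so any single share on its own looks uniformly random. The sketch assumes honest-but-curious parties, simulates all of them in one process, and requires the true total to stay below the modulus; the hospital counts are made up.

```python
import secrets

MODULUS = 2**61 - 1  # a prime; all share arithmetic is done modulo this value

def share(secret, n_parties):
    """Split a non-negative integer into n additive shares that sum to it mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_values):
    """Simulate n parties jointly computing the sum of their private values.

    Row i holds the shares of party i's value; party j receives column j only,
    publishes the sum of its column, and the published sums add up to the total.
    """
    n = len(private_values)
    shares_by_owner = [share(v, n) for v in private_values]
    published = [sum(row[j] for row in shares_by_owner) % MODULUS for j in range(n)]
    return sum(published) % MODULUS

# Three hypothetical hospitals compute their combined case count.
print(secure_sum([120, 340, 75]))  # 535
```

Richer MPC protocols build multiplication and comparison on top of this primitive, which is what makes joint model training possible.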

The practical applications of privacy-preserving AI span numerous industries:

- Healthcare – Federated learning enables multi-hospital collaboration on diagnostic models while helping meet HIPAA requirements

- Finance – Differential privacy allows banks to share fraud patterns without exposing customer details

- Retail – Privacy-preserving analytics extract shopping behavior insights without tracking individuals

- Telecommunications – Federated learning improves network optimization using device data that never leaves users’ phones

- Government – MPC and differential privacy enable census and other public-data analysis while protecting citizen privacy

According to research from MIT, organizations implementing privacy-preserving AI technologies experience higher user trust and engagement. A study of healthcare apps found that those transparently using federated learning had 34% higher user retention than comparable apps requiring data upload, suggesting that privacy protection can translate directly into business value.

The regulatory environment increasingly favors privacy-preserving approaches. The GDPR’s data minimization principle and requirement for privacy by design align naturally with technologies like federated learning and differential privacy. Organizations implementing these technologies find compliance easier and less costly than traditional approaches requiring extensive data protection measures.

Leading privacy-preserving AI platforms include:

- OpenMined – An open-source community developing privacy-preserving AI tools including PySyft for federated learning and secure computation

- Intel’s Private AI Framework – Hardware-accelerated privacy-preserving computation leveraging Intel SGX secure enclaves

- NVIDIA FLARE – An enterprise-grade federated learning platform designed for healthcare and financial services

- Flower (flwr) – A framework simplifying federated learning implementation across diverse machine learning frameworks

Challenges remain in widespread adoption. Privacy-preserving techniques often require additional computational resources and can slightly reduce model accuracy. Organizations must balance these tradeoffs against privacy benefits. However, ongoing research continues improving the efficiency and effectiveness of privacy-preserving approaches, making them increasingly practical for mainstream applications.

The future of AI safety inevitably involves stronger privacy protections. Organizations that invest in privacy-preserving AI safety tools now position themselves advantageously as privacy regulations tighten and user expectations rise. Moreover, the competitive advantage of accessing distributed data through federated learning can outweigh the technical challenges involved.

For more insights on implementing cutting-edge AI technologies responsibly, explore Etagicon’s comprehensive resources.

Frequently Asked Questions (FAQs)

What are AI safety tools and why are they important?

AI safety tools are technologies designed to ensure AI systems operate reliably, ethically, and securely. They’re important because AI increasingly makes decisions affecting people’s lives, and without proper safeguards, systems can produce harmful, biased, or unpredictable outcomes.

How do bias detection tools work in AI systems?

Bias detection tools analyze AI models and their outputs across different demographic groups to identify unfair treatment. They use statistical measures to quantify disparities and provide techniques to reduce bias while maintaining model performance.
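As a concrete example of such a statistical measure, demographic parity difference compares how often a model predicts the positive outcome (for instance, a loan approval) for each group. The sketch below computes it from scratch; the function names and toy predictions are hypothetical, and libraries such as Fairlearn offer a ready-made `demographic_parity_difference` in `fairlearn.metrics`. Demographic parity is only one of several fairness criteria, and the right one depends on the application.

```python
import numpy as np

def selection_rates(y_pred, groups):
    """Fraction of positive predictions within each demographic group."""
    return {g: float(y_pred[groups == g].mean()) for g in np.unique(groups)}

def demographic_parity_difference(y_pred, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())

# Toy binary predictions (1 = approved) for applicants in two hypothetical groups.
y_pred = np.array([1, 0, 1, 1, 0, 1, 0, 0, 0, 1])
groups = np.array(["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"])

print(selection_rates(y_pred, groups))
print(demographic_parity_difference(y_pred, groups))
```

Here group A receives positive predictions 60% of the time and group B 40%, a gap of about 0.2 that a bias review might then investigate.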

What is the difference between explainability and interpretability in AI?

Interpretability refers to understanding how a model works internally, while explainability focuses on understanding why specific decisions were made. Both are crucial for building trust and meeting regulatory requirements in AI deployment.

Are AI safety tools required by law?

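Increasingly, for certain systems. The EU's AI Act sets binding requirements for high-risk AI systems, including risk management, data governance, record-keeping, human oversight, and accuracy, robustness, and cybersecurity, though it specifies outcomes rather than particular tools. Similar regulations are emerging in other jurisdictions. Even where safety tools are not legally required, implementing them reduces liability and builds user trust.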

Can small organizations afford AI safety tools?

Yes, many effective AI safety tools are open-source and free to use. While enterprise platforms offer additional features, organizations of any size can implement basic safety measures like bias testing and monitoring without significant investment.

How often should AI models be monitored after deployment?

Continuously, where possible. AI models can degrade quickly as data patterns change, so automated real-time monitoring is ideal. Where that is not feasible, evaluate models on a regular schedule (weekly is a common baseline) and monitor critical systems more frequently to catch issues before they affect users.
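One common way to detect changing data patterns is to compare each input feature's live distribution against its training distribution. The sketch below computes the population stability index (PSI) for one feature; the choice of PSI, the 10 quantile bins, and the 0.2 alert threshold (a widely used rule of thumb, not a standard) are assumptions, and production monitoring tools typically track several drift statistics alongside model performance once ground-truth labels arrive.

```python
import numpy as np

def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline (training) sample and a live sample of one feature.

    Bins are quantiles of the baseline, so each holds about 1/bins of the baseline data.
    eps guards against log(0) when a bin is empty.
    """
    cuts = np.quantile(expected, np.linspace(0, 1, bins + 1))[1:-1]  # interior cut points
    exp_frac = np.bincount(np.digitize(expected, cuts), minlength=bins) / len(expected)
    act_frac = np.bincount(np.digitize(actual, cuts), minlength=bins) / len(actual)
    exp_frac = np.clip(exp_frac, eps, None)
    act_frac = np.clip(act_frac, eps, None)
    return float(np.sum((act_frac - exp_frac) * np.log(act_frac / exp_frac)))

# Synthetic data: a training baseline, a live batch from the same distribution,
# and a live batch whose mean has drifted.
rng = np.random.default_rng(42)
baseline = rng.normal(0.0, 1.0, 10_000)
stable = rng.normal(0.0, 1.0, 10_000)
shifted = rng.normal(0.75, 1.0, 10_000)

results = {}
for name, live in [("stable", stable), ("shifted", shifted)]:
    psi = population_stability_index(baseline, live)
    results[name] = psi
    status = "ALERT" if psi > 0.2 else "ok"  # rule-of-thumb threshold
    print(f"{name}: PSI={psi:.3f} {status}")
```

Running a check like this on a schedule (or on every batch of live traffic) turns "monitor the model" into a concrete alert that can trigger investigation or retraining.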

What’s the relationship between AI safety and AI security?

AI safety focuses on ensuring systems behave as intended and don’t cause unintended harm, while AI security protects against malicious attacks. Both are essential components of responsible AI deployment and often use complementary tools and techniques.

Do privacy-preserving AI techniques reduce model accuracy?

Often, though the size of the cost varies. Differential privacy adds calibrated noise that protects individual privacy while preserving overall patterns. The accuracy impact depends on the privacy budget (epsilon), the dataset size, and the model: with large datasets and moderate budgets it can be a few percentage points, but strict budgets or small datasets can reduce accuracy substantially.
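The link between noise and accuracy is easiest to see with the Laplace mechanism on a simple counting query, rather than full model training. The sketch below releases a count with epsilon-differential privacy and measures the typical error at several privacy budgets; the function name `dp_count_at_least` and the synthetic age data are hypothetical.

```python
import numpy as np

def dp_count_at_least(values, threshold, epsilon, rng):
    """Epsilon-differentially private count of values >= threshold (Laplace mechanism).

    A count has sensitivity 1: adding or removing one person changes it by at most 1,
    so Laplace noise with scale 1/epsilon satisfies epsilon-differential privacy.
    """
    true_count = int(np.sum(values >= threshold))
    return true_count + rng.laplace(loc=0.0, scale=1.0 / epsilon)

rng = np.random.default_rng(7)
ages = rng.integers(18, 90, size=10_000)  # synthetic dataset
true_value = int(np.sum(ages >= 65))

errors = {}
for epsilon in (0.1, 1.0, 10.0):
    # Repeating the query here only estimates the typical error; in a real system
    # every release spends privacy budget.
    noisy = np.array([dp_count_at_least(ages, 65, epsilon, rng) for _ in range(1_000)])
    errors[epsilon] = float(np.mean(np.abs(noisy - true_value)))
    print(f"epsilon={epsilon}: true={true_value}, mean abs error={errors[epsilon]:.2f}")
```

Because Laplace noise has a mean absolute value equal to its scale, the typical error is roughly 1/epsilon: around 10 at epsilon = 0.1 and around 0.1 at epsilon = 10, small relative to a count in the thousands. Differentially private model training (for example, DP-SGD) applies the same budget-versus-noise tradeoff to gradients, which is where its accuracy cost comes from.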

Conclusion

As artificial intelligence continues reshaping industries and society, the tools we use to ensure its safety have become as important as the AI itself. The AI Safety Tools explored in this guide, from comprehensive governance platforms to sophisticated bias detection systems and from adversarial defense mechanisms to privacy-preserving technologies, represent the current state of the art in responsible AI development and deployment.

The landscape of AI safety is no longer theoretical or aspirational. Organizations across sectors are actively implementing these tools, driven by regulatory requirements, competitive pressures, and genuine commitment to ethical innovation. Those who invest in comprehensive safety measures today won't just avoid the pitfalls of irresponsible AI; they'll also gain competitive advantages through increased user trust, faster regulatory approvals, and more robust systems that deliver sustainable value over time.

At Etagicon, we believe the future belongs to organizations that balance innovation with responsibility. The AI Safety Tools available in 2026 make this balance achievable, providing practical solutions to previously intractable challenges. Whether you’re just beginning your AI journey or optimizing existing deployments, these tools offer pathways to building AI systems that are not only powerful and efficient but also safe, fair, and trustworthy. The question isn’t whether to implement AI safety measures but how comprehensively and how quickly your organization can adopt them. For more cutting-edge insights on artificial intelligence, technology trends, and digital transformation, explore more blogs at Etagicon, where we break down complex topics into actionable knowledge that drives real-world results.